Platform Capabilities

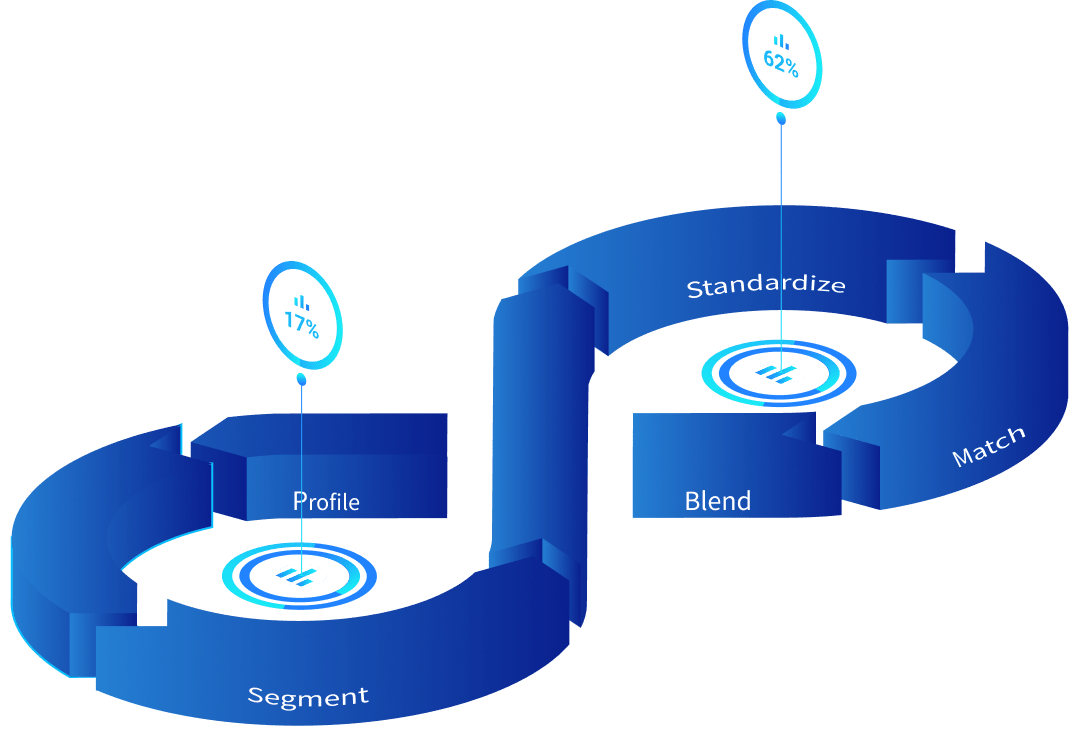

Spin Up

Aggregate

Bring together critical internal data or external data.

Analyze

Collaborate on data projects with both ad-hoc and repeatable, automated analysis.

Enrich

Produce new or improved data sets to feed risk, compliance, or new data products.

Operationalize

Data Refinery delivers significant value to large enterprises

Clients of Data Refinery can…

Key Platform Outcomes

Accelerate

Data Refinery accelerates project timelines by streamlining your teams' access to critical data.

- Enables projects to start faster with automated schema inference and faster loading of data sources.

- Start new data projects and begin improving data quicker via our user-friendly interface.

- Connect existing data tools with easy integration for rapid access to critical data.

Orchestrate

Data Refinery orchestrates technical wins to provide quick, trusted, and efficient outcomes to the firm.

- Scales up and down dynamically to support processing needs while controlling costs.

- Orchestrates, via templates, efficient solutions to common industry and data problems.

- Supports governance, data re-use, and project collaboration across the enterprise.

- Deploys to your AWS environment, giving you more control over your data and cloud spend.

Improve

Data Refinery improves and protects the end-to-end usefulness and value of the firm’s critical data.

- Improve critical data with purpose-built enrichment and data remediation capabilities.

- Automates repetitive and laborious data science, engineering, and reporting activity with background tasks to increase the value of your data experts' focus.

- Increases the usefulness of critical data and safeguards sensitive data with built-in data governance.

- Data Refinery’s available services bring real, hands-on industry expertise and data refinement skill sets to your critical data project.

Industry Challenges

Data for Risk & Regulatory Compliance

New regulations, increased regulatory scrutiny, and new risks are exposing gaps, inaccuracies, and deficiencies in the management of the data required to manage risk and comply with regulations.

With Data Refinery, enterprises are able to leverage, improve, and expand critical data to achieve the “regulatory-grade data” required to meet regulatory standards and mitigate risk.

Transformation & Modernization Programs

Businesses are transitioning legacy applications and operations to modern technology architectures to take advantage of the cloud, leverage data more, and take advantage of Al, to drive business value and improve operational resiliency.

With Data Refinery, enterprises can quickly migrate legacy data and processes, build out next-generation data pipelines, and automate data analytics and management to power next-generation business products and services.

Data as a Product

Large enterprises are sitting on a treasure trove of data that can add significant value to clients and critical business processes, but the data is difficult to leverage and is largely untapped in its ability to be used.

With Data Refinery, enterprises can quickly assemble new data sets, augment and enrich the data, and develop new data assets to be integrated into business processes and new products.

Data for AI

Organizations are leveraging Al more and more to drive new value, yet the data feeding AI models requires active management to improve the reliability and usefulness of the Al models.

With Data Refinery, organizations can quickly experiment, expand, and improve the data to drive more value from Al investments.

Financial services regulators expect that to effectively manage risk, financial institutions and securities firms must have mature quality assurance practices in place to manage adherence to regulations and an institution’s policies and controls. Regulators are asking for access to the data flowing throughout the business to allow them to examine the bank’s controls from AML & KYC, to credit and operational risk, to liquidity, transactions, and resiliency. Foundational to their examinations, regulators are improving their skills, tools, and techniques to look more broadly and more deeply at the critical data elements in use, in addition to interviews and traditional examination techniques. The result: banks are prioritizing modernization initiatives to enhance controls, data quality and their use of data enhance controls.

In 2023, Kingland partnered with DIACSUS to survey executives from the world’s top banks, investment firms, and data suppliers about their business and data priorities. While business priorities may vary institution to institution, these executives all agreed to one common priority across their most important initiatives in 2024: Critical Data. Rather than focusing on the growing volumes of data throughout the enterprise, these executives have realized that 2024 is a year of focus, but as we enter 2024, their focus isn’t necessarily on managing all of the data flowing through their enterprise.

In the world of financial services data, clients and products are two of the most important categories of data. A client or product record may be a simple as a handful of attributes, or as complex as 1,000 attributes to describe all of the characteristics of a client. When we focus on classification data, we are focused in on the data attributes used to group, compare, contrast, and represent the same qualities or characteristics for a specific entity or product. Put another way, classifications typically answer two types of questions:

-

What is the entity or product?

-

What are we allowed to do with the entity or product?

Every transaction a financial institution completes traces back to some sort of account, and inevitably, a client or many clients. Client and account data continues to be the lifeblood of banking and capital markets activity, required for operational processes, critical for risk monitoring, and mandated by regulators. As new risks emerge and new regulations are enacted, many times 1 to 2 simple attributes are missing from customer and account information. Additionally, as a customer relationship expands from the products and services they use today, to new products and services, another 1 to 2 simple attributes are missing. The problem of missing customer and account information may seem fairly straightforward, but when the largest institutions have tens or hundreds of millions of customers, even just one simple attribute can be a $10 million problem.

Everyone is investing in AI. The ability to automate and improve so many business processes makes AI a game changer for most businesses. Advancements in generative AI have simplified the process to experiment and many firms are running pilot programs, proof of concepts, and full-blown initiatives to roll out AI applications to their business unit or enterprise.

A problem is lurking though: reference data. While the most useful predictive and generative AI models will leverage whatever data you feed them, if the reference data within those data sets is not fit for purpose, the models simply won’t perform.